Kaggle Digit Recognizer series

For this first attempt at getting actual Kaggle classification results, the neural network from the Machine Learning course is changed only in its input layer size. A single hidden layer with 25 units is used, along with the defaults of 50 training iterations and a regularization lambda of 1.
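As a rough sketch of what that setup looks like in Octave, the snippet below lists the settings described above and the weight matrix shapes they imply. It assumes the Kaggle images are 28x28 pixels (784 inputs), and the variable names are illustrative rather than taken from the actual training script.

```octave
% Layer sizes (input size assumes 28x28 pixel Kaggle images)
input_layer_size  = 28 * 28;   % 784 inputs
hidden_layer_size = 25;        % single hidden layer
num_labels        = 10;        % digits 0-9, with 0 stored as label 10

lambda  = 1;                         % regularization strength
options = optimset('MaxIter', 50);   % 50 training iterations

% Weight matrix shapes; each has one extra column for the bias unit
theta1_size = [hidden_layer_size, input_layer_size + 1];
theta2_size = [num_labels, hidden_layer_size + 1];
num_params  = prod(theta1_size) + prod(theta2_size);

fprintf('Theta1: %dx%d, Theta2: %dx%d, %d weights in total\n', ...
        theta1_size(1), theta1_size(2), ...
        theta2_size(1), theta2_size(2), num_params);
```

Only `input_layer_size` differs from the course exercise here; everything downstream follows from it.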

A little code rearrangement is in order here too. Some things in ex4.m aren’t needed (parts 2 through 5). Most of its remaining logic moves into trainNN.m, with a few lines added to save the trained Theta matrices. New is runNN.m, which loads the Thetas, runs sample data through the network, and saves the results in a Kaggle-submission-friendly format like so:

%% runNN.m

thetaFile = 'Thetas.mat';

testDataFile = 'test.mat';

resultsFile = 'results.csv';

% load trained model theta matrices

load(thetaFile);

% load test data

load(testDataFile);

% predict outputs from test samples

pred = predict(Theta1, Theta2, Xtest);

% change 10 labels back to 0 for Kaggle results

pred(pred==10) = 0;

% save predicted results

outfd = fopen(resultsFile, 'w');

fprintf(outfd, 'ImageId,Label\n');

for n = 1:numel(pred)

fprintf(outfd, '%d,%d\n', n, pred(n));

end

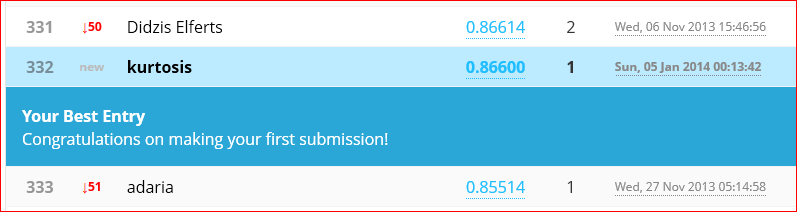

fclose(outfd);Initial training of the net was done with all 42,000 samples. Splitting the data in to training and validation sets will be done later. After 50 training iterations the cost function value was 1.19. The network self-classified its training data with 87.698% accuracy. The initial Kaggle submission of the test data set achieved 86.600% accuracy, well behind the two Kaggle sample solutions with lots of room for improvement.
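The training/validation split mentioned above could look something like the following. This is only a sketch under assumptions: it uses random stand-in data instead of the real samples, a hypothetical 20% hold-out fraction, and made-up variable names. The `accuracy` helper is the usual "percentage of matching labels" measure, which is how a self-classification figure like the one above is typically computed.

```octave
% Stand-in data with the same layout as the training set:
% one row per sample, labels in y. Real code would load X and y instead.
m = 1000;                  % hypothetical sample count (the real set has 42,000)
X = rand(m, 784);
y = randi(10, m, 1);       % labels 1..10, with 10 standing for digit 0

val_fraction = 0.2;        % assumed hold-out share
idx   = randperm(m);       % shuffled sample indices
n_val = round(val_fraction * m);

val_idx   = idx(1:n_val);
train_idx = idx(n_val+1:end);

Xval   = X(val_idx, :);
yval   = y(val_idx);
Xtrain = X(train_idx, :);
ytrain = y(train_idx);

% Percentage of predictions that match the true labels
accuracy = @(pred, labels) mean(double(pred == labels)) * 100;
```

Training on `Xtrain` and scoring `accuracy` on `Xval` gives an estimate of test performance without spending a Kaggle submission.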

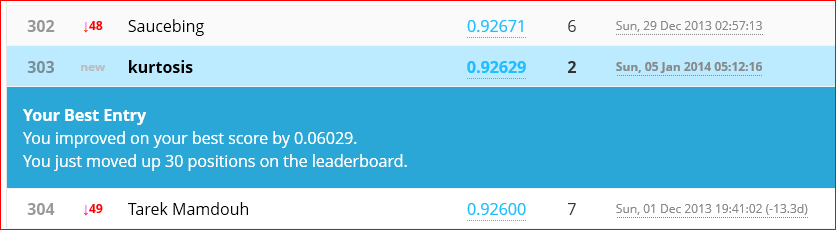

For an unguided attempt at improving on this result, I trained a new network with 300 hidden nodes (still a single hidden layer) over 5000 iterations. Final self-classified accuracy was 100% and the cost function value was 0.0192. Run time was about 4200 seconds, versus 10 seconds for the previous attempt. The Kaggle submission from this net was 92.629% accurate.
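To put the two runs side by side, here is a small arithmetic sketch using only the figures reported above. The weight counts assume 784 inputs (28x28 pixel images) and 10 output labels, with one bias weight per unit. The widening gap between training and Kaggle accuracy is consistent with the larger net overfitting, though a validation set would be needed to confirm that.

```octave
% Figures reported above for the two runs
hidden    = [25, 300];
train_acc = [87.698, 100.0];     % self-classified accuracy (%)
test_acc  = [86.600, 92.629];    % Kaggle submission accuracy (%)
run_time  = [10, 4200];          % approximate seconds

% Weight counts, assuming 784 inputs and 10 outputs plus bias terms
n_in  = 784;
n_out = 10;
params = hidden .* (n_in + 1) + n_out .* (hidden + 1);

% Gap between training and test accuracy, in percentage points
gap = train_acc - test_acc;

for k = 1:numel(hidden)
  fprintf('%3d hidden units: %6d weights, gap %.3f points, %d s\n', ...
          hidden(k), params(k), gap(k), run_time(k));
end
```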