The Kronecker product is a snazzy bit of linear algebra that I don’t use often enough to remember exactly how it works. I most recently ran into it during an excellent course on machine learning using Spark MLlib, where it was used to simplify a variety of data set feature samplings (e.g. grouped offsets within a time series, aggregate blocks in a series). I have also used it in previous data-science-flavored courses.

The first example in the course lab material was straightforward enough:

$$ \begin{bmatrix} 1 & 2 \\\ 3 & 4 \end{bmatrix} \otimes \begin{bmatrix} 1 & 2 \end{bmatrix} = \begin{bmatrix} 1 \cdot 1 & 1 \cdot 2 & 2 \cdot 1 & 2 \cdot 2 \\\ 3 \cdot 1 & 3 \cdot 2 & 4 \cdot 1 & 4 \cdot 2 \end{bmatrix} = \begin{bmatrix} 1 & 2 & 2 & 4 \\\ 3 & 6 & 4 & 8 \end{bmatrix} $$

But the second example starts to get obscure:

$$ \begin{bmatrix} 1 & 2 \\\ 3 & 4 \end{bmatrix} \otimes \begin{bmatrix} 1 & 2 \\\ 3 & 4 \end{bmatrix} = \begin{bmatrix} 1 \cdot 1 & 1 \cdot 2 & 2 \cdot 1 & 2 \cdot 2 \\\ 1 \cdot 3 & 1 \cdot 4 & 2 \cdot 3 & 2 \cdot 4 \\\ 3 \cdot 1 & 3 \cdot 2 & 4 \cdot 1 & 4 \cdot 2 \\\ 3 \cdot 3 & 3 \cdot 4 & 4 \cdot 3 & 4 \cdot 4 \end{bmatrix} = \begin{bmatrix} 1 & 2 & 2 & 4 \\\ 3 & 4 & 6 & 8 \\\ 3 & 6 & 4 & 8 \\\ 9 & 12 & 12 & 16 \end{bmatrix} $$

Using the same values in both input matrices makes it hard to tell which operand came from where. This would be much clearer to me if unique numbers were used for the inputs:

$$ \begin{bmatrix} 1 & 2 \\\ 3 & 4 \end{bmatrix} \otimes \begin{bmatrix} 5 & 6 \\\ 7 & 8 \end{bmatrix} = \begin{bmatrix} 1 \cdot 5 & 1 \cdot 6 & 2 \cdot 5 & 2 \cdot 6 \\\ 1 \cdot 7 & 1 \cdot 8 & 2 \cdot 7 & 2 \cdot 8 \\\ 3 \cdot 5 & 3 \cdot 6 & 4 \cdot 5 & 4 \cdot 6 \\\ 3 \cdot 7 & 3 \cdot 8 & 4 \cdot 7 & 4 \cdot 8 \end{bmatrix} = \begin{bmatrix} 5 & 6 & 10 & 12 \\\ 7 & 8 & 14 & 16 \\\ 15 & 18 & 20 & 24 \\\ 21 & 24 & 28 & 32 \end{bmatrix} $$

This is the approach used on the Kronecker product Wikipedia page. But I still want to see this more clearly, without needing to be shown the intermediate computations:

$$ \begin{bmatrix} 1 & 0 & 0 \\\ 0 & 1 & 0 \\\ 0 & 0 & 1 \end{bmatrix} \otimes \begin{bmatrix} 1 & 2 \\\ 3 & 4 \end{bmatrix} = \begin{bmatrix} 1 & 2 & 0 & 0 & 0 & 0 \\\ 3 & 4 & 0 & 0 & 0 & 0 \\\ 0 & 0 & 1 & 2 & 0 & 0 \\\ 0 & 0 & 3 & 4 & 0 & 0 \\\ 0 & 0 & 0 & 0 & 1 & 2 \\\ 0 & 0 & 0 & 0 & 3 & 4 \end{bmatrix} $$

Hey, look at how that 1-2-3-4 block gets replicated! But what about all those multiplications? OK, try this:

$$ \begin{bmatrix} 1 & 0 & 0 \\\ 0 & 5 & 0 \\\ 0 & 0 & 10 \end{bmatrix} \otimes \begin{bmatrix} 1 & 2 \\\ 3 & 4 \end{bmatrix} = \begin{bmatrix} 1 & 2 & 0 & 0 & 0 & 0 \\\ 3 & 4 & 0 & 0 & 0 & 0 \\\ 0 & 0 & 5 & 10 & 0 & 0 \\\ 0 & 0 & 15 & 20 & 0 & 0 \\\ 0 & 0 & 0 & 0 & 10 & 20 \\\ 0 & 0 & 0 & 0 & 30 & 40 \end{bmatrix} $$

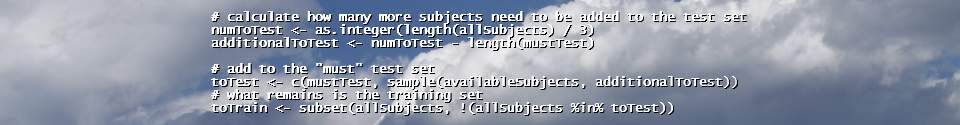
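The block pattern in the last two examples can be sketched in a few lines of Python. This is only an illustration of the idea, not how any library implements it: `kron_by_blocks` is a hypothetical helper name, and it assumes both inputs are 2-D arrays. Each element of the left matrix scales one copy of the right matrix, and the scaled copies are tiled in the same layout as the left matrix.

```python
import numpy as np


def kron_by_blocks(a, b):
    """Hypothetical helper: tile scaled copies of b in the layout of a.

    Block (i, j) of the result is a[i, j] * b. Assumes a and b are 2-D.
    """
    a = np.asarray(a)
    b = np.asarray(b)
    rows, cols = a.shape
    # One scaled copy of b for every element of a, kept in a's row/column order.
    blocks = [[a[i, j] * b for j in range(cols)] for i in range(rows)]
    # np.block stitches the nested list of blocks into a single matrix.
    return np.block(blocks)


a = np.diag([1, 5, 10])          # the diagonal matrix from the last example
b = np.array([[1, 2], [3, 4]])   # the 1-2-3-4 block
result = kron_by_blocks(a, b)
print(result)
```

The zeros in the left matrix wipe out their blocks entirely, which is why only the diagonal blocks survive, scaled by 1, 5 and 10.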

In Python’s NumPy the function is named kron. In R it is named kronecker, and %x% can be used in place of the full name (in keeping with R’s tradition of being weird).
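As a quick check, here is a minimal sketch that runs the unique-numbers example from earlier through NumPy's `kron`. The variable names are my own; the only library call is `np.kron`.

```python
import numpy as np

a = np.array([[1, 2], [3, 4]])
b = np.array([[5, 6], [7, 8]])

# Every element of a scales a full copy of b.
product = np.kron(a, b)
print(product)

# The first course example: a 2x2 matrix with a 1x2 row vector.
row = np.array([[1, 2]])
wide = np.kron(a, row)
print(wide.shape)  # (2, 4)
```

The result shape is always the element-wise product of the input shapes, so a 2x2 with a 2x2 gives 4x4, and a 2x2 with a 1x2 gives 2x4.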